As we’ve emphasized from the beginning, MIDI is a symbolic encoding of messages. These messages have a standard interpretation, so you have some assurance that your MIDI file generates a similar performance wherever it’s played, in the sense that the instruments played are standardized. How “good” or “authentic” those instruments sound comes down to the synthesizer and the way it creates sounds in response to the messages you’ve recorded.

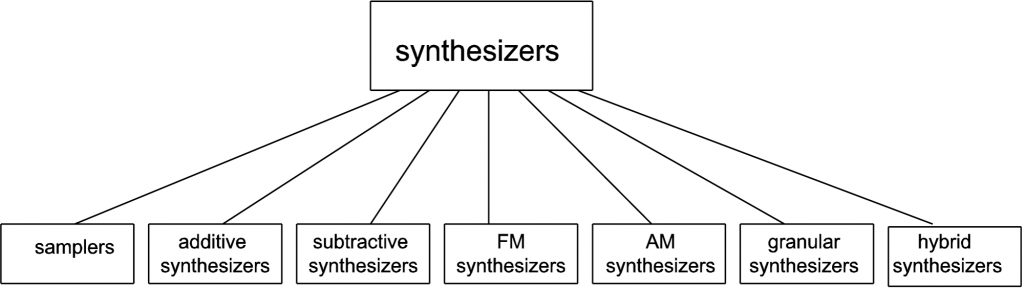

We find it convenient to define a synthesizer as any hardware or software system that generates sound electronically based on user input. Some sources distinguish between samplers and synthesizers, defining the latter as devices that use subtractive, additive, AM, FM, or some other method of synthesis, as opposed to drawing on stored “banks” of samples. Our usage of the term is diagrammed in Figure 6.17.

A sampler is a hardware or software device that can store large numbers of sound clips for different notes played on different instruments. These clips are called samples (a different use of the term, to be distinguished from individual digital audio samples). A repertoire of samples stored in memory is called a sample bank. When you play a MIDI data stream via a sampler, these samples are pulled out of memory and played: a C on a piano, an F on a cello, or whatever is asked for in the MIDI messages. Because the sounds played are actual recordings of musical instruments, they sound realistic.

The NN-XT sampler from Reason is pictured in Figure 6.18. You can see that there are WAV files for piano notes, but there isn’t a WAV file for every single note on the keyboard. In a method called multisampling, one audio sample can be used to create the sound of a number of neighboring notes. The notes covered by a single audio sample constitute a zone. The sampler is able to use a single sample for multiple notes by pitch-shifting the sample up or down by an appropriate number of semitones. The pitch can’t be shifted too far, however, without eventually distorting the timbre and amplitude envelope of the note, to the point that the note no longer sounds like the instrument and frequency it’s supposed to be. Notes at the high and low ends of the range can be stretched further without our minding it, since our ears are less sensitive in these areas.
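The zone lookup and pitch shift described above can be sketched in a few lines. This is a minimal illustration, not how the NN-XT is implemented: the zone table, the function names, and the assumption that the sampler shifts pitch by simple resampling in 12-tone equal temperament are all ours.

```python
# Hypothetical zone table (not taken from any real sampler patch):
# (lowest MIDI note, highest MIDI note, MIDI note at which the sample was recorded)
ZONES = [
    (57, 62, 60),  # sample recorded at C4 (MIDI 60) covers A3 through D4
    (63, 68, 66),  # sample recorded at F#4 (MIDI 66) covers D#4 through G#4
    (69, 74, 72),  # sample recorded at C5 (MIDI 72) covers A4 through D5
]


def find_zone(note):
    """Return the (low, high, root) zone that covers a MIDI note, or None."""
    for low, high, root in ZONES:
        if low <= note <= high:
            return (low, high, root)
    return None


def playback_ratio(note, root):
    """Playback-rate ratio that shifts a sample recorded at `root` to `note`.

    Assumes equal temperament, where each semitone multiplies the
    frequency by 2**(1/12).
    """
    return 2.0 ** ((note - root) / 12.0)


def ratio_for_note(note):
    """Find the zone for a note and return the ratio needed to play it."""
    zone = find_zone(note)
    if zone is None:
        raise ValueError(f"no zone covers MIDI note {note}")
    _, _, root = zone
    return playback_ratio(note, root)
```

For example, `ratio_for_note(62)` plays the C4 sample about 12% faster to reach D4. With simple resampling, a faster playback rate also shortens the note, which is one reason the amplitude envelope suffers when a sample is shifted too far.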

There can also be more than one audio sample associated with a single note. For example, a single note can be represented by three samples, recorded with the note played at three different velocities: high, medium, and low. The same note has a different timbre and amplitude envelope depending on the velocity with which it is played, so having more than one sample for a note results in more realistic sounds.
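As a rough sketch of how velocity layers might be selected, the following picks one of three samples from the velocity of a MIDI Note On message. The file names and the velocity thresholds are hypothetical; real samplers let you set these boundaries per patch.

```python
# Hypothetical velocity layers for a single note:
# (highest velocity the layer handles, sample file name)
LAYERS = [
    (42, "piano_C4_soft.wav"),
    (84, "piano_C4_medium.wav"),
    (127, "piano_C4_loud.wav"),
]


def pick_layer(velocity):
    """Return the sample file for a MIDI Note On velocity (1 to 127)."""
    if not 1 <= velocity <= 127:
        raise ValueError("Note On velocity must be between 1 and 127")
    for top, sample_name in LAYERS:
        if velocity <= top:
            return sample_name
```

Here `pick_layer(30)` returns the soft sample and `pick_layer(100)` the loud one. Velocity 0 is excluded because a Note On with velocity 0 is conventionally treated as a Note Off.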

Samplers can also be used for sounds that aren’t necessarily recreations of traditional instruments. It’s possible to assign whatever sound file you want to the notes on the keyboard. You can create your own entirely new sound bank, or you can purchase additional sound libraries and install them (depending on the features offered by your sampler). Sample libraries come in a variety of formats. Some contain raw audio WAV or AIFF files which have to be mapped individually to keys. Others are in special sampler file formats that are compressed and automatically installable.

[aside]Even the term analog synthesizer can be deceiving. In some sources, an analog synthesizer is a device that uses analog circuits to generate sound electronically. But in other sources, an analog synthesizer is a digital device that emulates good old-fashioned analog synthesis in an attempt to get some of the “warm” sounds that analog synthesis provides. The Subtractor Polyphonic Synthesizer from Reason is described as an analog synthesizer, although it processes sound digitally.[/aside]

A synthesizer, if you use this word in the strictest sense, doesn’t have a huge memory bank of samples. Instead, it creates sound more dynamically. It could do this by beginning with basic waveforms like sawtooth, triangle, or square waves and performing mathematical operations on them to alter their shapes. The user controls this process with knobs, dials, sliders, and other input controls on the control surface of the synthesizer, whether it is a hardware synthesizer or a soft synth. Under the hood, a synthesizer could be using a variety of mathematical methods, including additive, subtractive, FM, AM, or wavetable synthesis, or physical modeling. We’ll examine some of these methods in more detail in Section 6.3.1. Creating sounds this way may not reproduce the exact sound of a musical instrument. Musical instruments are complex structures, and it’s difficult to model their timbre and amplitude envelopes exactly. However, synthesizers can create novel sounds that we rarely, if ever, encounter in nature or music, offering creative possibilities to innovative composers. The Subtractor Polyphonic Synthesizer from Reason is pictured in Figure 6.19.
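To make the idea of starting from a basic waveform and reshaping it concrete, here is a small sketch using only the Python standard library. The naive waveform formulas and the one-pole low-pass filter are our own illustrative choices (a filter like this is the kind of operation a subtractive synthesizer applies); they are not taken from any particular synthesizer.

```python
def sawtooth(phase):
    """Naive sawtooth: rises from -1 to 1 over one cycle (phase in [0, 1))."""
    return 2.0 * phase - 1.0


def square(phase):
    """Naive square wave: 1 for the first half of the cycle, -1 for the second."""
    return 1.0 if phase < 0.5 else -1.0


def triangle(phase):
    """Naive triangle wave: -1 at phase 0, peaking at 1 at phase 0.5."""
    return 1.0 - 4.0 * abs(phase - 0.5)


def oscillator(shape, freq_hz, sample_rate, num_samples):
    """Generate num_samples of the given waveform at freq_hz."""
    return [shape((i * freq_hz / sample_rate) % 1.0) for i in range(num_samples)]


def one_pole_lowpass(samples, alpha):
    """Smooth a waveform with a one-pole low-pass filter.

    alpha is between 0 and 1; smaller values remove more high-frequency
    content, rounding off the sharp edges of the waveform.
    """
    out = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out
```

A call such as `one_pole_lowpass(oscillator(square, 440.0, 44100, 1024), 0.1)` produces a 440 Hz square wave with softened edges. These naive waveforms alias at high frequencies, so a practical synthesizer would use band-limited versions.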

In reality, there’s a good deal of overlap between these two ways of handling sound synthesis. Many samplers allow you to manipulate the samples with methods and parameter settings similar to those in a synthesizer. And, like a sampler, a synthesizer doesn’t necessarily start from nothing. It generally has basic patches (settings) that serve as a starting point, prescribing, for example, the initial waveform and how it should be shaped. That patch is loaded in, and the user can make changes from there. You can see that both devices pictured allow the user to manipulate the amplitude envelope (the ADSR settings), apply modulation, use low frequency oscillators (LFOs), and so forth. The possibilities seem endless with both types of sound synthesis devices.
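The ADSR settings mentioned above describe how a note’s loudness changes over time: attack, decay, sustain, release. A minimal sketch of a linear ADSR envelope follows. The function name, the linear segments, and the parameter units (seconds for times, 0 to 1 for the sustain level) are assumptions made for illustration; actual devices often use curved segments.

```python
def adsr_gain(t, attack, decay, sustain, release, note_off):
    """Gain (0 to 1) at t seconds after note-on, for a key released at note_off.

    attack, decay, and release are durations in seconds; sustain is a level
    between 0 and 1. All segments are linear in this sketch.
    """

    def held(time):
        # Envelope level while the key is still held down.
        if time < attack:
            return time / attack
        if time < attack + decay:
            return 1.0 - (1.0 - sustain) * (time - attack) / decay
        return sustain

    if t < 0:
        return 0.0
    if t < note_off:
        return held(t)

    # After release, fade linearly from the level reached at note-off to silence.
    elapsed = t - note_off
    if elapsed >= release:
        return 0.0
    return held(note_off) * (1.0 - elapsed / release)
```

Multiplying each audio sample by `adsr_gain` at that sample’s time shapes the note. An LFO could modulate the same gain, or the pitch, in a similar way.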
