8.2.2.1 Capturing the Four-Dimensional Sound Field

When listening to sound in an acoustic space, such as at a live orchestral concert, you hear different sounds arriving from many directions. The various instruments are spread out on a stage, and their sound arrives at your ears somewhat spread out in time and direction according to the physical location of the instruments. You also hear subtly nuanced copies of the instrument sounds as they’re reflected from the room surfaces at even more varying times and directions. The audience makes their own sound in applause, conversation, shuffling in seats, cell phones going off, etc. These sounds arrive from different directions as well. Our ability to perceive this four-dimensional effect is the result of the physical characteristics of our hearing system. With two ears, the differences in arrival time and intensity between them allow us to perceive sounds coming from many different directions. Capturing this effect with audio equipment and then either reinforcing the live audio or recreating the effect upon playback is quite challenging.

The biggest obstacle is the microphone. A traditional microphone records the sound pressure amplitude at a single point in space. All the various sound waves arriving from different directions at different times are merged into a single electrical voltage wave on a wire. With all the data merged into a single audio signal, much of the four-dimensional acoustic information is lost. When you play that recorded sound out of a loudspeaker, all the reproduced sounds now come from a single direction as well. Adding more loudspeakers doesn’t solve the problem, because then you just have every sound repeated identically from every direction, and the precedence effect causes your brain to perceive the sound as coming only from whichever loudspeaker’s sound reaches your ears first.

[aside]If the instruments are all acoustically isolated, the musicians may have a hard time hearing themselves and each other. This poses a significant obstacle, as they will have a difficult time trying to play together. To address this problem, you have to set up a complicated monitoring system. Typically each musician has a set of headphones that feeds him or her a custom mix of the sounds from each mic/instrument.[/aside]

The first step in addressing some of these problems is to start using more than one microphone. Stereo is the most common recording and playback technique. Stereo is an entirely man-made effect, but with only one additional loudspeaker it produces a much more spacious result on playback than a single channel can. The basic idea is that since we have two ears, two loudspeakers should be sufficient to reproduce some of the four-dimensional effects of acoustic sound. It’s important to understand that there is no such thing as stereo sound in an acoustic space. You can’t make a stereo recording of a natural sound. When recording sound that will be played back in stereo, the most common strategy is recording each sound source with a dedicated microphone that is as acoustically isolated as possible from the other sound sources and microphones. For example, if you were trying to record a simple rock band, you would put a microphone on each drum in the drum kit as close to the drum as possible. For the electric bass, you would put a microphone as close as possible to the amplifier and probably also take a direct signal from the instrument itself through a cable. This gives you two signals to work with for that instrument. You would do the same for the guitar. If possible, you might even isolate the bass amplifier and the guitar amplifier inside acoustically sealed boxes or rooms to keep their sound from bleeding into the other microphones. The singer would also be isolated in a separate room with a dedicated microphone.

During the recording process, the signal from each microphone is recorded on a separate track in the DAW software and written to a separate audio file on the hard drive. With an isolated recording of each instrument, a mix can be created that distributes the sound of each instrument between two channels of audio that are routed to the left and right stereo loudspeakers. To the listener sitting between the two loudspeakers, a sound that is found only in the left channel sounds like it comes from the left of the listener, and vice versa for the right channel. A sound mixed equally into both channels appears to the listener as though it is coming from an invisible loudspeaker directly in the middle. This is called the phantom center channel. By adjusting the balance between the two channels (panning), you can place sounds at various locations in the phantom image between the two loudspeakers. This flexibility in mixing is possible only because each instrument was recorded in isolation. This stereo mixing technique is very popular and produces acceptable results for most listeners.
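Panning, the balance adjustment described above, comes down to a pair of gains applied to each sample. The sketch below assumes a constant-power (sine/cosine) pan law; the text does not specify one, and consoles and DAWs differ in which law they use. The function name `constant_power_pan` is hypothetical.

```python
import math


def constant_power_pan(sample: float, pan: float) -> tuple[float, float]:
    """Split one mono sample into (left, right) output samples.

    pan runs from -1.0 (hard left) through 0.0 (phantom center) to +1.0
    (hard right). Assumes a sine/cosine constant-power pan law.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    angle = (pan + 1.0) * math.pi / 4.0  # map [-1, 1] onto [0, pi/2]
    return sample * math.cos(angle), sample * math.sin(angle)


for pan in (-1.0, -0.5, 0.0, 0.5, 1.0):
    left, right = constant_power_pan(1.0, pan)
    print(f"pan {pan:+.1f}: L = {left:.3f}  R = {right:.3f}")
```

At the center position each channel gets about 0.707 of the signal (roughly -3 dB), so L² + R² stays at 1 across the whole pan range and a sound keeps about the same loudness as it moves through the phantom image.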

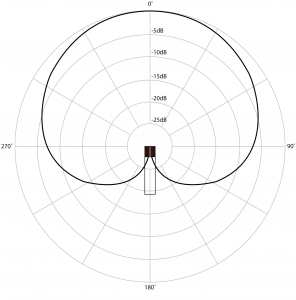

When recording in a situation where it’s not practical to use multiple microphones in isolation – such as for a live performance or a location recording where you’re capturing an environmental sound – it’s still possible to capture the sound in a way that creates a stereo-like effect. This is typically done using two microphones and manipulating the way the pickup patterns of the microphones overlap. Figure 8.5 shows a polar plot for a cardioid microphone. Recall that a cardioid microphone is a directional microphone that picks up the sound very well on-axis with the front of the microphone but doesn’t pick up the sound as well off-axis. This polar plot shows only one plotted line, representing the pickup pattern for a specific frequency (usually 1 kHz), but keep in mind that the directivity of the microphone changes slightly for different frequencies. Lower frequencies are less directional and higher frequencies are more directional than what is shown in Figure 8.5. With that in mind, we can examine the plot for this frequency to get an idea of how the microphone responds to sounds from varying directions. Our reference level is taken at 0° (directly on-axis). The dark black line representing the relative pickup level of the microphone intersects with the 0 dB line at 0°. As you move off-axis, the sensitivity of the microphone changes. At around 75°, the line intersects with the -5 dB point on the graph, meaning that at that angle, the microphone picks up the sound 5 dB quieter than it does on-axis. As you move to around 120°, the microphone now picks up the sound 15 dB quieter than the on-axis level. At 180° the level is null.
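The shape in Figure 8.5 can be approximated with the idealized first-order cardioid equation, pickup = 0.5 × (1 + cos θ), where θ is the angle off-axis. This is a textbook idealization rather than the measured response of the microphone in the figure, so the numbers it produces differ somewhat from the plot; `cardioid_gain_db` is a hypothetical helper name.

```python
import math


def cardioid_gain_db(angle_deg: float) -> float:
    """Relative pickup of an ideal cardioid, in dB re: on-axis (0 degrees).

    Uses the idealized pattern 0.5 * (1 + cos(theta)); real microphones
    deviate from this and vary with frequency.
    """
    gain = 0.5 * (1.0 + math.cos(math.radians(angle_deg)))
    if gain <= 1e-12:
        return float("-inf")  # the rear null
    return 20.0 * math.log10(gain)


for angle in (0, 75, 90, 120, 180):
    print(f"{angle:3d} deg: {cardioid_gain_db(angle):7.1f} dB")
```

The ideal pattern gives about -4 dB at 75°, -6 dB at 90°, and -12 dB at 120°, with a null at 180°. The measured curve in Figure 8.5 reads -5 dB and -15 dB at 75° and 120°, a reminder that real microphones depart from the ideal.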

One strategy for recording sound with a stereo effect is to use an XY cross pair. The technique works by taking two matched cardioid microphones and positioning them so that their capsules are as close together as possible, one directly above the other, with each microphone angled 45° to either side of center so that the two microphones cross over each other. Getting the capsules lined up this way is very important because you want the sound from every direction to arrive at both microphones at the same time and therefore in the same phase.

[aside]At 0° on-axis to the XY pair, the individual microphone elements are still tilted 45°, making the microphone’s pickup a few dB quieter than its own on-axis level would be. Yet because the sound arrives at both microphones at the same level and the same phase, the sound is perfectly reinforced, causing a boost in amplitude. In this case the result is actually slightly louder than the on-axis level of either individual microphone.[/aside]

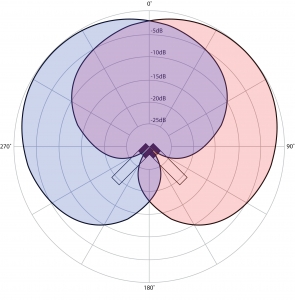

Figure 8.6 shows a recording device with integrated XY cross pair microphones, and Figure 8.7 shows the polar patterns of both microphones when used in this configuration. The signals of these two microphones are recorded onto separate tracks and then routed to separate loudspeakers for playback. The stereo effect happens when these two signals combine in the air from the loudspeakers. Let’s first examine the audio signals that are unique to the left and right channels. For a sound that arrives at the microphones 90° off-axis, there is approximately a 15 dB difference in level for that sound captured between the two microphones. As a rule of thumb, whenever you have a level difference of 10 dB or greater between two similar sounds, the louder sound takes precedence. Consequently, when that sound is played back through the two loudspeakers, it is perceived as though it’s entirely located at the right loudspeaker. Likewise, a sound arriving 270° off-axis sounds as though it’s located entirely at the left loudspeaker. At 0°, the sound arrives at both microphones at the same level. Because the sound is at an equal level in both microphones, and therefore is played back equally loud through both loudspeakers, it sounds to the listener as if it’s coming from the phantom center image of the stereo field. At 45°, the polar plots tell us that the sound arrives at the right microphone approximately 7 dB louder than at the left. Since this difference is less than 10 dB, the quieter copy in the left channel still contributes to the image, pulling the sound away from the right loudspeaker so that it seems to come from somewhere between the right loudspeaker and the phantom center. If the microphones are placed appropriately relative to the sound being recorded, this technique can provide a fairly effective stereo image without requiring any additional mixing or panning.
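The level differences described above can be estimated by modeling each microphone as an ideal cardioid, 0.5 × (1 + cos θ), with the right microphone aimed at +45° and the left at -45°. The ideal polar equation is an assumption (the figures show real, measured patterns), and the helper names are hypothetical.

```python
import math


def cardioid(offset_deg: float) -> float:
    """Linear pickup of an ideal cardioid for a source offset_deg off its axis."""
    return 0.5 * (1.0 + math.cos(math.radians(offset_deg)))


def to_db(gain: float) -> float:
    """Convert a linear gain to dB, returning -inf for silence."""
    return 20.0 * math.log10(gain) if gain > 1e-12 else float("-inf")


def xy_levels_db(source_deg: float, splay_deg: float = 45.0) -> tuple[float, float]:
    """(left, right) levels in dB re: one microphone on-axis, for an XY pair.

    Angles follow the text: 0 = straight ahead, 90 = right, 270 = left.
    The right microphone aims at +splay_deg, the left at -splay_deg.
    """
    left = cardioid(source_deg + splay_deg)   # offset from the left mic's axis
    right = cardioid(source_deg - splay_deg)  # offset from the right mic's axis
    return to_db(left), to_db(right)


for source in (0, 45, 90, 270):
    left, right = xy_levels_db(source)
    print(f"{source:3d} deg: L {left:6.1f} dB  R {right:6.1f} dB  "
          f"R-L {right - left:+5.1f} dB")
```

With ideal cardioids the model predicts about 6 dB of difference at 45° and about 15 dB at 90°, close to the 7 dB and 15 dB read from Figure 8.7. At 0° each microphone sits 45° off its own axis and picks up about -1.4 dB; if those two identical signals add coherently, the sum is about +4.6 dB relative to one microphone on-axis, which is the reinforcement described in the aside about the 0° position.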

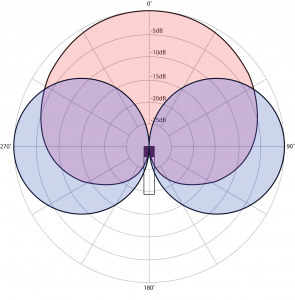

Another technique for recording a live sound for a stereo effect is called mid-side. Mid-side also uses two microphones, but unlike XY, one microphone is a cardioid microphone and the other is a bidirectional or figure-eight microphone. The cardioid microphone is called the mid microphone and is pointed forward (on-axis), and the figure-eight microphone is called the side microphone and is pointed perpendicular to the mid microphone. Figure 8.8 shows the polar patterns of these two microphones in a mid-side configuration.

The side microphone has a single diaphragm that is open to the air on both sides and responds to the difference in pressure between its front and back. The important thing to understand here is that because of this single diaphragm, sounds arriving from opposite sides of the microphone are captured in opposite polarity. That is, a pressure impulse that produces a positive voltage when it arrives from one side of the microphone produces a negative voltage when it arrives from the other side. It is this polarity effect of the figure-eight microphone that allows the mid-side technique to work. After you’ve recorded the signal from these two microphones onto separate channels, you have to set up a mid-side matrix decoder in your mixing console or DAW software in order to create the stereo mix. To create a mid-side matrix, you take the audio from the mid microphone and route it to both left and right output channels (pan center). The audio from the side microphone gets split two ways. First it gets sent to the left channel (pan left). Then it also gets sent to the right channel (pan right) with the polarity inverted. Figure 8.9 shows a mid-side matrix setup in Logic. The “Gain” plug-in inserted on the “Side -” track is being used only to invert the polarity (erroneously labeled “Phase Invert” in the plug-in interface).

Through the constructive and destructive combinations of the mid and side signals at varying angles, this matrix creates a stereo effect at its output. The center image is essentially derived from the on-axis response of the mid microphone, which by design is also the null point of the side microphone. Any sound that arrives at 0° to the mid microphone is added to both the left and right channels with no contribution from the side microphone, since at 0° to the mid-side setup the side microphone pickup is null. If you look at the polar plot, you can see that the mid microphone picks up every sound within a 120° spread with only 6 dB or so of variation in level. Aside from this slight level difference, the mid microphone doesn’t contain any information that can alone be used to determine a sound’s placement in the stereo field. However, approaching the 300° point (arriving more from the left of the mid-side setup), you can see that the sound arriving at the mid microphone is also picked up by the side microphone at the same level and the same polarity. Similarly, a sound that arrives at 60° also arrives at the side microphone at the same level as the mid, but this time it is inverted in polarity from the signal at the mid microphone. If you look at how these two signals combine, you can see that the mid sound at 300° mixes together with the “Side +” track and, because it is the same polarity, it reinforces in level. That same sound mixes together with the “Side -” track and cancels out because of the polarity inversion. The sound that arrives from the left of the mid-side setup is therefore louder in the left channel and accordingly appears to come from the left side of the stereo field upon playback.
Conversely, a sound coming from the right side at 60° reinforces when mixed with the “Side -” track but cancels out when mixed with the “Side +” track, and the matrixed result is louder in the right channel and accordingly appears to come from the right of the stereo field. Sounds that arrive between 0° and 300° or between 0° and 60° have a more moderate reinforcing and canceling effect, and the resulting sound appears somewhere between left, right, and center depending on the specific angle. This creates the perception of sound that is spread between the two channels in the stereo image.
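The mid-side matrix described above amounts to two sums: left = mid + side and right = mid - side. The sketch below applies that matrix and feeds it idealized pickups: a cardioid mid, 0.5 × (1 + cos θ), and a figure-eight side modeled as -sin θ, so that its positive lobe faces left and sounds from the left reach it in the same polarity as the mid, as in the text. The polar equations, the sign convention, and the function names are assumptions for illustration.

```python
import math


def ms_decode(mid: list[float], side: list[float]) -> tuple[list[float], list[float]]:
    """Mid-side matrix: mid to both outputs, side to the left as-is
    ("Side +") and to the right with its polarity inverted ("Side -")."""
    if len(mid) != len(side):
        raise ValueError("mid and side must have the same length")
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


def ms_pickup(angle_deg: float) -> tuple[float, float]:
    """Idealized (mid, side) pickup for a source at angle_deg
    (0 = front, 90 = right, 270 = left)."""
    theta = math.radians(angle_deg)
    mid = 0.5 * (1.0 + math.cos(theta))   # cardioid facing front
    side = -math.sin(theta)               # figure-eight, positive lobe to the left
    return mid, side


for angle in (0, 60, 300):
    m, s = ms_pickup(angle)
    (left,), (right,) = ms_decode([m], [s])
    print(f"{angle:3d} deg: L = {left:+.3f}  R = {right:+.3f}")
```

At 0° the side pickup is zero, so both outputs are equal and the sound sits in the phantom center. At 300° the side signal reinforces the mid in the left output and nearly cancels it in the right; at 60° the reverse happens.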

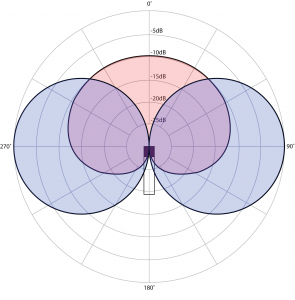

The result here is quite similar to the XY cross pair technique, with one significant difference: adjusting the relative level of the “Mid” track alters the spread of the stereo image. Figure 8.10 shows a mid-side polar pattern with the mid microphone attenuated by 10 dB. Notice that the angles where the two microphones pick up the sound at equal levels have narrowed to 45° and 315°. This means that when they are mixed together in the mid-side matrix, a smaller range of sounds is mixed equally into both left and right channels. This effectively widens the stereo image. Conversely, increasing the level of the mid microphone relative to the side microphone causes more sounds to be mixed into the left and right channels equally, thereby narrowing the stereo image. Unlike the XY cross pair, with mid-side the stereo image can be easily manipulated after the recording has already been made.
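The width control can be explored numerically by applying a gain g to the mid signal before the matrix, so that left = g·mid + side and right = g·mid - side. The sketch below uses idealized polar equations (cardioid mid 0.5 × (1 + cos θ), figure-eight side -sin θ with its positive lobe to the left), which are assumptions and will not reproduce the exact angles in Figure 8.10. It compares the interchannel level difference for slightly off-center sources with the mid fader at 0 dB and at -10 dB; the function names are hypothetical.

```python
import math


def ms_output_levels(angle_deg: float, mid_gain_db: float = 0.0) -> tuple[float, float]:
    """Magnitudes of the (left, right) outputs for one source after the matrix.

    0 = front, 90 = right, 270 = left. abs() is used because the polarity
    of one output can flip when the mid level is pulled down.
    """
    g = 10.0 ** (mid_gain_db / 20.0)          # mid fader as a linear gain
    theta = math.radians(angle_deg)
    mid = 0.5 * (1.0 + math.cos(theta))       # idealized cardioid
    side = -math.sin(theta)                   # idealized figure-eight
    return abs(g * mid + side), abs(g * mid - side)


def right_minus_left_db(angle_deg: float, mid_gain_db: float = 0.0) -> float:
    """Interchannel level difference in dB (positive = louder on the right)."""
    left, right = ms_output_levels(angle_deg, mid_gain_db)
    return 20.0 * math.log10(right / left)


for mid_db in (0.0, -10.0):
    diffs = ", ".join(f"{a} deg: {right_minus_left_db(a, mid_db):+.1f} dB"
                      for a in (0, 10, 20))
    print(f"mid {mid_db:+.0f} dB -> {diffs}")
```

With the mid fader at -10 dB, a source 10° or 20° off center produces a much larger level difference between the channels, so it is placed closer to one loudspeaker and the image is wider. With these ideal patterns, pulling the mid down far enough also makes one output carry a polarity-inverted copy of the source, which is why the sketch compares magnitudes.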

The concept behind mid-side recording can be expanded in a number of ways to allow recordings to capture sound in many directions while still maintaining the ability to recreate the desired directional information on playback. One example is shown in Figure 8.11. This microphone from the Soundfield Company has four microphone capsules in a tetrahedral arrangement, each pointing in a different direction. Using proprietary matrix processing, the four audio signals captured from this microphone can be combined to generate a mono, stereo, mid-side, four-channel surround, five-channel surround, or even seven-channel surround signal.

The counterpart to setting up microphones for stereo recording is setting up loudspeakers for stereo listening. For this reason, we return to the mid-side technique in the context of loudspeakers in Section 8.2.4.5.

The simplest (and arguably the most effective) method for capturing four-dimensional sound is binaural recording. It’s quite phenomenal that despite having only two transducers in our hearing system (our ears), we are able to hear and perceive sounds from all directions. So instead of using complicated setups with multiple microphones, you can simply put two microphones inside the ears of a real human and capture exactly what the two ears are hearing. This method of capture inherently includes all of the complex inter-aural time and intensity difference information, caused by the physical location of the ears and the shape of the human head, that allows the brain to decode and perceive the direction of the sound. If this recorded sound is then played back through headphones, the listener perceives the sound almost exactly as the person wearing the microphones heard it during the recording. While wearable headphone-style binaural microphone setups exist, sticking small microphones inside the ears of a real human is not always practical, and an acceptable compromise is to use a binaural dummy head microphone. A dummy head microphone is essentially the plastic head of a mannequin with molds of a real human ear on either side of the head. Inside each of these prosthetic ears is a small microphone, and together the two capture a binaural recording. Figure 8.12 shows a commercially available dummy head microphone from Neumann.

With binaural recording, the results are quite effective. All the level, phase, and frequency response information of the sound arriving at both ears individually that allows us to perceive sound is maintained in the recording. The real limitation here is that the effect is largely lost when the sound is played through loudspeakers. The inter-aural isolation provided by headphones is required when listening to binaural recordings in order to get the full effect.
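One of the cues a binaural recording preserves is the inter-aural time difference (ITD). As a rough illustration, the sketch below uses Woodworth's spherical-head approximation, ITD = (r / c)(θ + sin θ). This is a swapped-in textbook model, not something a dummy head computes; the head radius of 8.75 cm and speed of sound of 343 m/s are assumed typical values. The model ignores the frequency-dependent filtering of the outer ear, which a real binaural recording captures.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s, air at roughly room temperature (assumed)
HEAD_RADIUS = 0.0875     # m, a commonly used average head radius (assumed)


def woodworth_itd(azimuth_deg: float, head_radius: float = HEAD_RADIUS,
                  c: float = SPEED_OF_SOUND) -> float:
    """Inter-aural time difference in seconds, Woodworth spherical-head model.

    azimuth_deg runs from 0 (straight ahead) to 90 (directly to one side).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (head_radius / c) * (theta + math.sin(theta))


for az in (0, 30, 60, 90):
    print(f"{az:2d} deg: {woodworth_itd(az) * 1e6:4.0f} microseconds")
```

For a source directly to one side the model gives roughly 0.66 ms, a tiny delay that the brain nevertheless uses to localize the sound.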


A few algorithms have been developed that mimic the binaural localization effect. These algorithms have been implemented in binaural panning plug-ins that are available for many DAW software programs, allowing you to create binaural effects artificially without requiring dummy head recordings. An example of a binaural panning plug-in is shown in Figure 8.13. One algorithm, called the Cetera algorithm, is owned by the Starkey hearing aid company. Starkey uses the algorithm in its hearing aids to make the amplified sound from a hearing aid seem more like the natural response of the ear. Starkey created a demo of the algorithm called the Starkey Virtual Barbershop. Although this recording sounds like it was captured with a binaural recording system, the binaural localization effects are actually rendered on a computer using the Cetera algorithm.
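Commercial binaural panners rely on far more detailed (often proprietary) processing than can be shown in a few lines, so the following is only a crude stand-in: it moves a mono sound toward one ear by delaying and attenuating the far ear's copy. The delay uses Woodworth's spherical-head ITD approximation; the level difference (up to an assumed 10 dB, scaled by sin θ) is an invented simplification rather than a measured head-related response, and `lateralize` is a hypothetical name. Over headphones it shifts the sound to one side but does not reproduce the full binaural effect.

```python
import math


def lateralize(mono: list[float], azimuth_deg: float, fs: int = 48000,
               head_radius: float = 0.0875, c: float = 343.0,
               max_ild_db: float = 10.0) -> tuple[list[float], list[float]]:
    """Return (left, right) headphone signals for a source at azimuth_deg.

    azimuth_deg: -90 (hard left) .. 0 (front) .. +90 (hard right).
    The far ear gets a delayed (ITD) and quieter (ILD) copy.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between -90 and 90 degrees")
    theta = math.radians(abs(azimuth_deg))
    itd = (head_radius / c) * (theta + math.sin(theta))        # Woodworth ITD, seconds
    delay = round(itd * fs)                                    # whole samples only
    far_gain = 10.0 ** (-max_ild_db * math.sin(theta) / 20.0)  # crude ILD
    near = list(mono) + [0.0] * delay                          # pad to equal length
    far = [0.0] * delay + [x * far_gain for x in mono]
    return (far, near) if azimuth_deg >= 0 else (near, far)


# A single click placed hard right: the left ear hears it later and quieter.
left, right = lateralize([1.0] + [0.0] * 9, 90)
print(right.index(1.0), max(range(len(left)), key=lambda i: abs(left[i])))
```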

8.2.2.2 Setting Levels for Recording

Before you actually hit the “record” button, you’ll want to verify that all your input levels are correct. The goal is to adjust the microphone preamplifiers so the signal from each microphone is coming into the digital converters at the highest voltage possible without clipping. Start by record-enabling the track in your software and then have the performer do the loudest part of his or her performance. Adjust the preamplifier so that this level peaks at least 6 dB below clipping. This leaves some headroom, so that if the performer sings or speaks even louder during the actual take, the signal is less likely to clip. While recording, keep an eye on the input meters for each track and make sure nothing clips. If you do get a clip, at the very least you need to reduce the input gain on the preamplifier. You may also need to redo that part of the recording if the clip was bad enough to cause audible distortion.
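The level check described above can be expressed as a small meter function. This sketch assumes floating-point samples where ±1.0 is digital full scale (integer PCM would first be divided by its maximum value) and uses the 6 dB headroom target from the text; the function names are hypothetical.

```python
import math


def peak_dbfs(samples: list[float]) -> float:
    """Peak level in dBFS, assuming float samples where 1.0 is full scale."""
    peak = max((abs(s) for s in samples), default=0.0)
    return 20.0 * math.log10(peak) if peak > 0.0 else float("-inf")


def check_level(samples: list[float], headroom_db: float = 6.0) -> str:
    """Classify a soundcheck buffer against the headroom target."""
    level = peak_dbfs(samples)
    if level >= 0.0:
        return f"CLIP at {level:.1f} dBFS: reduce the preamp gain"
    if level > -headroom_db:
        return f"HOT at {level:.1f} dBFS: less than {headroom_db:g} dB of headroom"
    return f"OK at {level:.1f} dBFS"


print(check_level([0.0, 0.25, -0.4]))   # loudest peak is 0.4, about -8 dBFS
```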

8.2.2.3 Multitrack Recording

As you learned in previous chapters, today’s recording studios are equipped with powerful multitrack recording software that allows you to record different voices and instruments on different tracks. This environment requires that you make choices about what elements should be recorded at the same time. For example, if you’re recording music using real instruments, you need to decide whether to record everything at the same time or record each instrument separately. The advantage to recording everything at the same time is that the musicians can play together and feel their way through the song. Musicians usually prefer this, and you almost always get a better performance when they play together. The downside to this method is that with all the musicians playing in the same room, it’s difficult to get good isolation between the instruments in the recording. Unless you’re very careful, you’ll pick up the sound of the drums on the vocalist’s microphone. This is hard to fix in post-production, because if you want the vocals louder in the mix, the drums get louder too.

If you decide to record each track separately, your biggest problem is going to be synchronization. If all the various voices and instruments are not playing together, they will not be very well synchronized. They will start and end their notes at slightly different times, they may not blend very well, and they may not all play at the same tempo. The first thing you need to do to combat this problem is to make sure the performer can hear the other tracks that have already been recorded. Usually this can be accomplished by giving the performer a set of headphones to wear that are connected to your audio interface. The first track you record will set the standard for tempo, duration, etc. If the subsequent tracks can be recorded while the performer listens to the previous ones, the original track can set the tempo. Depending on what you are recording, you may also be able to provide a metronome or click track for the performers to hear while they perform their track. Most recording software includes a metronome or click track feature. Even if you use good monitoring and have the performers follow a metronome, there will still be synchronization issues. You may need to have them do certain parts several times until you get one that times out correctly. You may also have to manipulate the timing after the fact in the editing process.
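A click track is just a short sound repeated at a fixed interval: at a tempo of B beats per minute, the clicks fall every 60/B seconds. The sketch below computes where each click starts in samples and renders a simple decaying sine blip; the 1 kHz, 10 ms click is an arbitrary assumed choice, and real DAW metronomes offer their own sounds and accent patterns. The function names are hypothetical.

```python
import math


def click_positions(bpm: float, sample_rate: int, num_beats: int) -> list[int]:
    """Sample index at which each click starts, for a steady tempo."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    samples_per_beat = sample_rate * 60.0 / bpm   # 60/bpm seconds per beat
    return [round(i * samples_per_beat) for i in range(num_beats)]


def render_click_track(bpm: float, sample_rate: int, num_beats: int,
                       freq: float = 1000.0, click_ms: float = 10.0) -> list[float]:
    """Mono click track: a short sine blip with a linear fade-out on every beat."""
    starts = click_positions(bpm, sample_rate, num_beats)
    total = round(num_beats * sample_rate * 60.0 / bpm)
    click_len = int(sample_rate * click_ms / 1000.0)
    out = [0.0] * total
    for start in starts:
        for n in range(min(click_len, total - start)):
            envelope = 1.0 - n / click_len
            out[start + n] = envelope * math.sin(2.0 * math.pi * freq * n / sample_rate)
    return out


print(click_positions(120, 44100, 4))   # [0, 22050, 44100, 66150]
```

At 120 BPM each beat lasts half a second, so at 44.1 kHz the clicks are 22,050 samples apart.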

Figure 8.14 shows a view from the mixing console during a recording session for a film score. You can see through the window into the stage where the orchestra is playing. Notice that the conductor and other musicians are wearing headphones to allow them to hear each other and possibly even the metronome associated with the beat map indicated by the timing view on the overhead screen. These are all strategies for avoiding synchronization issues with large multi-track recordings.

Another issue you will encounter in nearly every recording is that performers will make mistakes. Often the performer will need to make several attempts at a given performance before an acceptable performance is recorded. These multiple attempts are called takes, and each take represents a separate recording of the performer attempting the same performance. In some cases a given take may be mostly acceptable, but there was one small mistake. Instead of doing an entire new take, you can just re-record that short period of the performance that contained the mistake. This is called punch-in recording.

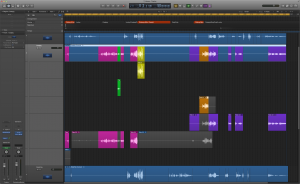

To make a punch-in recording, you set start and stop markers and begin playback of the session. The performer hears the preceding several seconds and starts singing along with the recording. When the timeline reaches the start marker, the software starts writing the recording to the track, and it reverts to playback mode when the stop marker is reached. In the end you will have several takes and punch-ins folded into a single track. This is called a composite track, usually abbreviated to comp. A comp track allows you to unfold the track to see all the various takes. You can then go through them and select the parts you want to keep from each take. A single composite version is created from all the takes you select. Figure 8.15 shows a multi-track recording session in Logic that uses a composite track that has been unfolded to show each take. The comp track is at the top and is color-coded to indicate which take was used for each period of time on the track.
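Comping, as described above, amounts to choosing which take supplies the audio for each stretch of the timeline. The sketch below assumes every take is already aligned to the session timeline and has the same length; a punch-in can be treated as a take whose only usable audio lies between its start and stop markers. It makes hard cuts at region boundaries, whereas DAWs normally apply short crossfades there to avoid clicks. `build_comp` and the (start, end, take_index) region format are hypothetical.

```python
def build_comp(takes: list[list[float]],
               selections: list[tuple[int, int, int]]) -> list[float]:
    """Assemble a composite track from aligned takes.

    takes      -- one sample list per take, all the same length
    selections -- (start, end, take_index) regions, end exclusive, that must
                  cover the whole timeline exactly once
    """
    length = len(takes[0])
    if any(len(take) != length for take in takes):
        raise ValueError("all takes must be the same length")
    comp = [0.0] * length
    covered = [False] * length
    for start, end, take_index in selections:
        for i in range(start, end):
            if covered[i]:
                raise ValueError(f"sample {i} is selected from two takes")
            comp[i] = takes[take_index][i]   # copy this region from the chosen take
            covered[i] = True
    if not all(covered):
        raise ValueError("the selections leave a gap in the comp")
    return comp


# Three hypothetical takes: keep take 0 at the start, take 2 in the middle,
# and take 1 at the end.
takes = [[1.0] * 6, [2.0] * 6, [3.0] * 6]
print(build_comp(takes, [(0, 2, 0), (2, 4, 2), (4, 6, 1)]))
```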

8.2.2.4 Recording Sound for Film or Video

Production audio for film or video refers to the sound captured during the production process – when the film or video is actually being shot. In production, there may be various sounds you’re trying to capture – the voices of the actors, environmental sounds, sounds of props, or other scenic elements. When recording in a controlled sound stage studio, you generally can capture the production audio with reasonably good quality, but when recording on location you constantly have to battle background noise. The challenge in either situation is to capture the sounds you need without capturing the sounds you don’t need.

All the same rules apply in this situation as in other recording situations. You need good quality microphones, and you need to get them as close as possible to the thing you’re trying to record. Microphone placement can be challenging in a production environment where high definition cameras are close up on actors, capturing a lot of detail. Typically the microphone needs to be invisible or out of the camera shot. Actors can wear wireless lavaliere microphones as long as they can be hidden under some clothing. This placement affects the quality of the sound being picked up by the microphone, but in the production environment compromises are a necessity. The primary emphasis is of course on capturing the things that would be impossible or expensive to change in post-production, like performances or action sequences. For example, if you don’t get the perfect performance from the actors, or the scenery falls down, it’s very difficult to fix the problem without completely repeating the entire production process. On the other hand, if the production audio is not captured with a high enough quality, the actor can be brought back in alone to re-record the audio without having to reshoot the video. The bottom line is that in the production environment, the picture and the performance are the most important things. Capturing the production audio is ultimately of less importance because it’s easier to fix in post-production.

With this in mind, most production audio engineers are willing to make some compromises by putting microphones under the clothing. The other option is to mount a small directional microphone to a long pole called a boom pole. Someone outside the camera shot holds the pole, and he or she can get the microphone fairly close to actors or objects without getting the microphone in the shot. Because reshooting is so expensive, the most important job of the boom pole operator is to keep the microphone out of the shot. Picking up usable production audio is secondary.

Musical scores are another major element in film and video. Composers typically work first on developing a few musical themes to be used throughout the film while the editing process is still happening. Once a full edit is completed, the composer takes the themes and creates versions of various lengths to fit with the timing of the edited scenes. Sometimes this is done entirely with electronic instruments directly in the DAW, with the video imported into the project file for a visual reference. Other times, a recording session is conducted in which an orchestra is brought into a recording studio called a scoring stage. The film is projected in the studio, and the orchestra, led by a conductor (sometimes the composer), performs the musical passages for the film, using clicks and streamers to help synchronize the important moments.

A final challenge to be mentioned in the context of film and video is the synchronization of sound and picture. The audio is typically captured on a completely different recording medium than the video or film, and the two are put back together in post-production. In the analog domain this can be a very tricky process. An early attempt at facilitating synchronization was the clapboard slate. The slate has an area where you can write the name of the show, the scene number, and the take number. There is also a block of wood connected to the slate with a hinge. This block of wood can be raised and lowered quickly onto the slate to make a loud clap sound. The person holding the slate reads out loud the information written on the slate while holding the slate in front of the camera and then drops the clapper. In post-production the slate information can be seen on the film and heard on the audio recording. This way you know that you have the correct audio recording with the correct video. The clap sound can easily be heard on the audio recording, and on the video you can easily see the moment that the clapper closes. The editor can line up the sound of the clap with the image of the clapper closing, and then everything after that is in sync. This simple and low-tech solution has proven to be quite effective and is still used in modern filmmaking along with other improvements in synchronization technology.
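The editor's job of lining up the clap can be sketched numerically: find the sample where the clap appears in the audio, convert it to seconds, and compare it with the time of the frame in which the clapper is seen closing. The simple threshold detector below is an assumption that works only for a clean, loud clap; the 24 fps default matches traditional film, and the function names are hypothetical.

```python
def first_onset(samples: list[float], threshold: float = 0.5) -> int:
    """Index of the first sample whose magnitude reaches the threshold."""
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            return i
    raise ValueError("no sample reaches the threshold")


def sync_offset_seconds(audio: list[float], sample_rate: int, clap_frame: int,
                        fps: float = 24.0, threshold: float = 0.5) -> float:
    """Seconds to slide the audio so the clap lines up with the picture.

    clap_frame is the zero-based frame in which the clapper is first seen
    closed. A positive result means the audio must be moved later.
    """
    clap_in_audio = first_onset(audio, threshold) / sample_rate
    clap_in_picture = clap_frame / fps
    return clap_in_picture - clap_in_audio


# Hypothetical take: silence, then a clap exactly 1 s into the audio file,
# while the clapper closes on frame 48 (2 s into the picture).
audio = [0.0] * 48000 + [0.9] + [0.0] * 100
print(sync_offset_seconds(audio, 48000, clap_frame=48))   # 1.0
```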

A time code format called SMPTE has been developed to address the issue of synchronization. The format of SMPTE time code is described in Chapter 6. The idea behind time code synchronization is that the film has a built-in measuring system. There are 24 frames or still pictures every second in traditional motion picture film, with each frame being easily identified. The problem is that on an audiotape there is no inherent way to know which part of the audio goes with which frame of video. Part of the SMPTE time code specification includes a method of encoding the time code into an audio signal that can be recorded on a separate track of audio on the tape recorder. This way, every point in the audio recording is linked to a specific frame of the video. In the digital domain, this time code can be encoded into the video signal as well as the audio signal, and the computer can keep everything in sync. The slate clapper has even been updated to display the current time code value to facilitate synchronization in post-production.
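Converting between a frame count and a time code label, and from there to an audio sample position, is simple arithmetic. The sketch below assumes non-drop-frame time code at a whole-number frame rate (24 fps by default) and a 48 kHz audio sample rate; drop-frame time code, used with 29.97 fps video, needs extra rules that this sketch does not handle. The function names are hypothetical.

```python
def frames_to_timecode(frame: int, fps: int = 24) -> str:
    """Non-drop-frame HH:MM:SS:FF label for a zero-based frame count."""
    if frame < 0:
        raise ValueError("frame must be non-negative")
    total_seconds, ff = divmod(frame, fps)
    total_minutes, ss = divmod(total_seconds, 60)
    hh, mm = divmod(total_minutes, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def timecode_to_sample(tc: str, fps: int = 24, sample_rate: int = 48000) -> int:
    """First audio sample belonging to the frame labeled tc (non-drop-frame)."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    frame = ((hh * 60 + mm) * 60 + ss) * fps + ff
    return frame * sample_rate // fps


print(frames_to_timecode(87869))          # 01:01:01:05
print(timecode_to_sample("00:00:01:00"))  # 48000
```

At 24 fps and 48 kHz, each film frame corresponds to exactly 2,000 audio samples, which is what lets the two recordings be locked together.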

8.2.2.5 Sound Effects

Sound effects are important components in film, video, and theatre productions. Sound effects are sometimes referred to as Foley sound, named after Jack Foley, who pioneered sound effect techniques in the early days of sound film, as the industry moved away from silent pictures. Foley artists are a special breed of filmmaker who create all the sound effects for a film manually in a recording session. Foley stages are recording studios with all kinds of toys, floor surfaces, and other gadgets that make various sounds. The Foley artists go into the stage and watch the film while performing all the sounds required, ranging from footsteps and turning doorknobs to guns, rain, and other environmental sounds. The process is a lot of fun, and some people build entire careers as Foley artists.


The first step in the creation of sound effects is acquiring some source material to work with. Commercial sound effect libraries are available for purchase, and there are some online sources for free sound effects, but the free sources are often of inconsistent quality. Sometimes you may need to go out and record your own source material. The goal here is not necessarily to find the exact sound you are looking for. Instead, you can try to find source material that has some of the characteristics of the sound. Then you can edit, mix and process the source material to achieve a more exact result. There are a few common tools used to transform your source sound. One of the most useful is pitch shifting. Spaghetti noodles breaking can sound like a tree falling when pitched down an octave or two. When using pitch shift, you will get the most dramatic transformative results when using a pitch shifter that does not attempt to maintain the original timing. In other words, when a sound is pitched up, it should also speed up. Pitch shifting in sound effect creation is demonstrated in the video associated with this section.
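A pitch shifter that does not preserve timing works like changing the playback speed of a tape: shifting by n semitones multiplies the playback rate by 2^(n/12), so shifting down an octave halves the pitch and doubles the duration. The sketch below does this by resampling with linear interpolation, which is a simple but fairly crude choice (it lets some aliasing through when pitching up); `varispeed` is a hypothetical name.

```python
def varispeed(samples: list[float], semitones: float) -> list[float]:
    """Pitch-shift by resampling, so duration changes along with pitch.

    Positive semitones = higher and shorter; negative = lower and longer.
    """
    rate = 2.0 ** (semitones / 12.0)   # playback-speed ratio
    out_len = int(len(samples) / rate)
    out = []
    for n in range(out_len):
        pos = n * rate                 # read position in the original
        i = int(pos)
        frac = pos - i
        nxt = samples[i + 1] if i + 1 < len(samples) else samples[i]
        out.append(samples[i] * (1.0 - frac) + nxt * frac)  # linear interpolation
    return out


print(varispeed([0.0, 1.0, 2.0, 3.0], -12))   # an octave down: twice as long
```

For the spaghetti example, `varispeed(snap, -24)`, with `snap` standing in for your recording, drops the sound two octaves and makes it four times longer.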

Another strategy is to mix several sounds together in a way that creates an entirely new sound. If you can break down the sound you are looking for into descriptive layers, you can then find source material for each of those layers and mix them all together. For example, you would never be able to record the roar of a Tyrannosaurus Rex, but if you can describe the sound you’re looking for, perhaps as something between an elephant trumpeting and a lion roaring, you’re well on your way to finding that source material and creating that new, hybrid sound.
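Layering comes down to adding sample values together, usually with a gain and a time offset per layer. The sketch below mixes any number of layers and, if the sum would exceed full scale (±1.0), scales the whole result down rather than letting it clip. The (samples, gain, offset) layer format and `mix_layers` are hypothetical; offsets are assumed to be non-negative sample counts, and in practice you would do this with faders and regions in a DAW.

```python
def mix_layers(layers: list[tuple[list[float], float, int]]) -> list[float]:
    """Sum (samples, gain, start_offset) layers into one sound.

    If the sum would exceed full scale (1.0), the whole mix is scaled down
    so its peak sits at 1.0 instead of clipping.
    """
    length = max((offset + len(samples) for samples, _, offset in layers), default=0)
    mix = [0.0] * length
    for samples, gain, offset in layers:
        for i, x in enumerate(samples):
            mix[offset + i] += gain * x
    peak = max((abs(x) for x in mix), default=0.0)
    if peak > 1.0:
        mix = [x / peak for x in mix]
    return mix


print(mix_layers([([0.5, 0.5], 1.0, 0), ([0.5], 1.0, 1)]))   # [0.5, 1.0]
```

A hybrid roar might be `mix_layers([(elephant, 0.8, 0), (lion, 1.0, 2205)])`, where `elephant` and `lion` are placeholders for your source recordings and the lion layer starts 2,205 samples (50 ms at 44.1 kHz) later.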

Sometimes reverb or EQ can help achieve the sound you are looking for. If you have a vocal recording that you want to sound like it is coming from an old answering machine, using an EQ to filter out the low and high frequencies but enhance the mid frequencies can mimic the sound of the small loudspeakers in those machines. Making something sound farther away can be accomplished by reducing the high frequencies with an EQ to mimic the effect of the high frequency loss over distance, and some reverb can mimic the effect of the sound reflecting from several surfaces during its long trip.
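Both tricks can be sketched with very simple filters. The following assumes mono float samples and uses first-order (one-pole) filters, which are much gentler than a real EQ; the cutoff frequencies (roughly 300 Hz to 3,400 Hz for the "small loudspeaker" band), the 30 ms delay, and the feedback amount are illustrative assumptions, and the single feedback echo is only a crude stand-in for a proper reverb. Note that this sketch band-limits the signal rather than boosting the mids.

```python
import math

def one_pole_lowpass(x, cutoff_hz, sr):
    """First-order low-pass: smooths the signal, attenuating highs above cutoff."""
    a = 1 - math.exp(-2 * math.pi * cutoff_hz / sr)  # smoothing coefficient
    y, prev = [], 0.0
    for s in x:
        prev += a * (s - prev)
        y.append(prev)
    return y

def one_pole_highpass(x, cutoff_hz, sr):
    """First-order high-pass: the input minus its low-passed version."""
    return [s - l for s, l in zip(x, one_pole_lowpass(x, cutoff_hz, sr))]

def answering_machine(x, sr, low_hz=300.0, high_hz=3400.0):
    """Crude band-pass: cut the lows and highs, keep the midrange."""
    return one_pole_lowpass(one_pole_highpass(x, low_hz, sr), high_hz, sr)

def distant(x, sr, cutoff_hz=2000.0, delay_s=0.03, feedback=0.4):
    """Dull the highs (air absorption), then add a feedback echo as a
    stand-in for reflections: y[n] = x[n] + feedback * y[n - d]."""
    out = one_pole_lowpass(x, cutoff_hz, sr)
    d = round(delay_s * sr)               # delay in samples
    for n in range(d, len(out)):
        out[n] += feedback * out[n - d]   # out[n - d] already includes echoes
    return out
```

Lowering `cutoff_hz` in `distant` makes the source seem farther away; a convolution or algorithmic reverb would replace the single comb filter in practice.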

8.2.2.6 MIDI and Other Digital Tools for Sound and Music Composition

In this section, we introduce some of the possibilities for sound and music creation that today’s software tools offer, even to someone not formally educated as a composer. We don’t include this section to suggest that MIDI-generated music is a perfectly good substitute for music composed by classically trained musicians. However, computer-generated music can sometimes be appropriate for a project for reasons of time, budget, resources, or style. Sound designers for film or theatre frequently take advantage of MIDI synthesizers and samplers as they create their soundscapes. Sometimes a segment of music can be “sketched in” by the sound designer with MIDI sequencers, and this “rough draft” can serve as scratch or temp music in a production or film. We’ll demonstrate the functionality of digital music tools and show you how to get the most out of them for music composition.

Traditionally, composing is the process of conceiving melodies and harmonies and writing them down on paper as a musical score. Many MIDI and other digital tools have been created to streamline and improve the scoring process: Finale, Sibelius, and the open source MuseScore, to name a few. Written musical scores can be played by live musicians, either as part of a performance or during a recording session. But today’s scoring software gives you even more options, allowing you to play back the score directly from the computer by interpreting it as MIDI messages and generating the music through samplers and synthesizers. Scoring software also allows you to render audio from the MIDI score and save it to a permanent file. Most software also lets you export your musical score as individual MIDI tracks, which can then be imported, arranged, and mixed in your DAW.
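To make "interpreting the score as MIDI messages" concrete, the sketch below turns a list of notated notes into time-ordered note-on and note-off messages with delta times, the form in which Standard MIDI Files store events. The note tuple layout, the `score_to_midi_events` helper, and the resolution of 480 ticks per quarter note are assumptions for illustration; the status bytes 0x90 (note on) and 0x80 (note off) are the standard MIDI values.

```python
PPQN = 480  # ticks per quarter note (an assumed, commonly used resolution)

def score_to_midi_events(notes, channel=0):
    """Convert (pitch, start_beat, length_beats, velocity) tuples into
    delta-timed MIDI messages: (delta_ticks, status, data1, data2)."""
    timed = []
    for pitch, start, length, vel in notes:
        on = round(start * PPQN)
        off = round((start + length) * PPQN)
        timed.append((on, 1, 0x90 | channel, pitch, vel))   # note on
        timed.append((off, 0, 0x80 | channel, pitch, 0))    # note off
    timed.sort()  # by tick; at equal ticks, note-offs (0) sort before note-ons (1)
    events, last = [], 0
    for tick, _, status, d1, d2 in timed:
        events.append((tick - last, status, d1, d2))        # delta time since previous event
        last = tick
    return events

# A C major arpeggio in quarter notes (beats counted in quarter notes)
arpeggio = [(60, 0, 1, 80), (64, 1, 1, 80), (67, 2, 1, 80)]
events = score_to_midi_events(arpeggio)
```

Sorting note-offs ahead of note-ons at the same tick keeps repeated pitches from cutting each other off, a detail scoring software has to handle when it plays back or exports a score.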

The musical quality of the audio generated by scoring software depends not only on the quality of the samplers and synthesizers used, but also on the performance data that you include in the score: markings for dynamics, articulation, and so forth. Although the musical control and intuitiveness of scoring software continue to improve, we can’t really expect the software to interpret allegro con moto, or even a crescendo or fermata, the way an actual musician would. If not handled carefully, computer-generated music can sound very “canned” and mechanical. Still, you can use your digital tools to create scratch or temp music for a production or film, giving collaborators, musicians, and audiences a good sense of the composition until the final score is ready to be produced. There are also a number of ways to tweak and improve computer-generated music, and if you learn to use your tools well, you can achieve surprisingly good results.
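One reason playback can sound mechanical is that software tends to interpret markings literally. The sketch below shows a literal reading of a crescendo: velocities interpolated in equal steps from one dynamic marking to another. The marking-to-velocity table is a hypothetical example (each scoring program uses its own values), and `crescendo` is an illustrative helper, not any program's actual algorithm.

```python
# Hypothetical marking-to-velocity table; scoring programs use their own values.
DYNAMICS = {"pp": 33, "p": 49, "mp": 64, "mf": 80, "f": 96, "ff": 112}

def crescendo(n_notes, start="p", end="f"):
    """Spread a hairpin across n_notes by linearly interpolating MIDI velocity."""
    v0, v1 = DYNAMICS[start], DYNAMICS[end]
    if n_notes == 1:
        return [v0]
    step = (v1 - v0) / (n_notes - 1)
    return [round(v0 + i * step) for i in range(n_notes)]

velocities = crescendo(8, "p", "f")  # evenly spaced steps from p to f
```

A performer would shape the same hairpin unevenly, perhaps holding back and then swelling late, which is exactly the nuance a straight-line mapping like this cannot capture.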

As mentioned, the quality of the sound you generate depends in large part on your sample playback system. The system could be the firmware on a hardware device, a standalone software application, or a software plug-in that runs within your DAW. A basic sampler plays back a specific sound in response to a received trigger, e.g., a key pressed on the MIDI keyboard or note data received from a music scoring application. Basic samplers may not even play a unique sound for each key; instead, they mathematically shape one sample file to produce multiple notes (as described in Chapter 7). More sophisticated samplers use multisampling, in which multiple variations of a sample or note are played back depending, for example, on how hard a note is played. These samplers may also use other performance parameters and data to manipulate the sound for a more dynamic, realistic feel. For instance, the mod wheel can be linked to an LFO that imparts a controllable vibrato to the sound sample. While these features greatly improve the realism of sample playback, the most powerful samplers go far beyond them.
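The two ideas in this paragraph can be sketched in a few lines: stretching one recorded note to other pitches by changing its playback rate, and choosing among velocity layers in a multisampled instrument. This assumes equal temperament (each semitone is a frequency ratio of 2^(1/12)); the helper names and the sample file names are hypothetical.

```python
def playback_ratio(note, root_note):
    """Rate at which to play a sample recorded at root_note so that it sounds
    at note (equal temperament: each semitone multiplies frequency by 2**(1/12))."""
    return 2 ** ((note - root_note) / 12)

def pick_layer(velocity, layers):
    """Choose a velocity layer. `layers` is a list of (max_velocity, sample_name)
    sorted by max_velocity; returns the first layer that covers the velocity."""
    for max_vel, name in layers:
        if velocity <= max_vel:
            return name
    return layers[-1][1]

# Hypothetical multisampled note with three velocity layers
LAYERS = [(50, "C4_soft.wav"), (100, "C4_medium.wav"), (127, "C4_hard.wav")]
print(playback_ratio(72, 60))     # 2.0: one octave above the recorded root
print(pick_layer(110, LAYERS))    # C4_hard.wav
```

Stretching a single sample too far from its root makes it sound unnatural, which is one reason better samplers record many notes and several velocity layers instead of relying on playback rate alone.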

Another potent feature provided by some samplers is the round robin. Suppose you play the same note twice on a real instrument. Even if both notes have the same pitch and are played with essentially the same force, in “real life” the two never sound exactly the same. With round robin, more than one version of a note is available for each instrument and velocity. The sampler automatically cycles playback through this set of similar samples so that no two consecutive “identical” notes sound exactly the same. To have round robin capability, the sampler needs multiple takes of the audio samples for every note, and for multisampled sounds this means multiple takes for every velocity layer as well. The number of round robin samples typically ranges from two to five, depending on the system. This duplication obviously increases the sampler’s memory requirements. Some sampler systems instead apply subtle processing to vary the way a sample sounds each time it is played back, simulating the round robin effect without additional samples, but this may not achieve quite the same realism.
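The cycling behavior can be sketched as a counter kept per note and velocity layer. This is a minimal illustration assuming the takes are identified by name; the `RoundRobin` class and its method are hypothetical, not the interface of any particular sampler.

```python
from collections import defaultdict

class RoundRobin:
    """Cycle through alternate takes of the same note and velocity layer so
    that repeated notes don't trigger the identical sample twice in a row."""

    def __init__(self, takes):
        # takes: {(note, layer): [take1, take2, ...]}
        self.takes = takes
        self.counters = defaultdict(int)   # next take index per (note, layer)

    def next_sample(self, note, layer):
        options = self.takes[(note, layer)]
        i = self.counters[(note, layer)]
        self.counters[(note, layer)] = (i + 1) % len(options)  # wrap around
        return options[i]

rr = RoundRobin({(60, "soft"): ["C4_soft_rr1", "C4_soft_rr2", "C4_soft_rr3"]})
played = [rr.next_sample(60, "soft") for _ in range(4)]
```

Four repeated soft C4s play takes 1, 2, 3, and then take 1 again. Each (note, layer) pair keeps its own counter, so every extra take multiplies the number of stored samples.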

[wpfilebase tag=file id=157 tpl=supplement /]

Another feature of high-end sampler instruments and sampler systems is multiple articulations. Consider the sound of a guitar, which has a different timbre depending on whether it’s played with a pick, plucked with a finger, palm-muted, or hammered on. Classical stringed instruments have even more articulations than guitars do. Rather than using a separate sampler instrument for each articulation, all of these articulations can be layered into one instrument while still giving you individual control over each. Typically the sampler has a number of keyswitches that switch between the articulations. Keyswitches are keys on the keyboard, usually above or below the instrument’s musical range. Pressing a keyswitch sets the articulation for the notes played after it.
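Keyswitching is essentially a small state machine: certain note numbers don't sound but change the current articulation. The sketch below assumes MIDI note numbers and a hypothetical mapping of three keys far below a string instrument's range; the class name and mapping are illustrative, not taken from a specific sampler.

```python
class KeyswitchInstrument:
    """Notes outside the playable range act as keyswitches that select the
    articulation used for subsequent notes."""

    def __init__(self, keyswitches, default):
        self.keyswitches = keyswitches   # {midi_note: articulation}
        self.articulation = default

    def note_on(self, note, velocity):
        if note in self.keyswitches:
            self.articulation = self.keyswitches[note]
            return None                  # the keyswitch itself makes no sound
        return (note, velocity, self.articulation)

# Hypothetical mapping: three keys well below the instrument's range
violin = KeyswitchInstrument({24: "sustain", 25: "pizzicato", 26: "staccato"},
                             default="sustain")
```

Calling `violin.note_on(25, 1)` switches to pizzicato silently, and every note after that is tagged pizzicato until another keyswitch arrives.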

An example of keyswitches on a sampler can be seen in Figure 8.16. Some sampler systems even have intelligent playback that switches articulations automatically depending on how notes overlap or are played in relation to each other. The more articulations a system has, the more realistic the sound and range you can get from an instrument. However, knowing how and when to employ those articulations depends on your familiarity with the particular instrument, so musical experience plays an important role here.
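As an illustration of "intelligent" articulation switching, here is one plausible rule: if a note starts before the previous note has ended, treat it as legato. This is a hypothetical rule for a monophonic line, assuming notes are given as (start, end, pitch) in beats and sorted by start time; commercial samplers use their own, usually more elaborate, logic.

```python
def auto_articulations(notes, default="sustain", overlap="legato"):
    """notes: list of (start_beat, end_beat, pitch), sorted by start.
    A note that begins before the previous note ends is tagged as legato."""
    result = []
    prev_end = None
    for start, end, pitch in notes:
        if prev_end is not None and start < prev_end:
            art = overlap        # overlapping notes: play a legato transition
        else:
            art = default        # detached note: normal attack
        result.append((pitch, art))
        prev_end = end
    return result

line = [(0.0, 1.1, 60), (1.0, 2.0, 62), (2.5, 3.0, 64)]
tags = auto_articulations(line)
```

Here the second note overlaps the first slightly and is tagged legato, while the third starts after a gap and gets the default articulation, which mirrors how a player might slur the first two notes and re-articulate the third.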

[aside]In addition to the round-robin and multiple articulation samples, some instruments also include release samples, such as the subtle sound of a string being released or muted, which are played back when a note is released to help give it a natural and realistic ending. Even these release samples could have round-robin variations of their own![/aside]

As you can see, realistic computer-generated music depends not only on the quality, but also on the quantity and diversity of the content to play back. Realistic virtual instruments demand powerful and intelligent sample playback systems, not to mention the computer hardware to support them. One company’s upright bass instrument alone contains over 21,000 samples. For optimum performance, some of these larger sampler systems even allow networked playback, offloading the CPU and memory load of the samples to other dedicated computers.
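Sample counts grow quickly because each dimension multiplies the others: notes, velocity layers, round robin takes, and articulations. The sketch below is a back-of-the-envelope estimate; every input value in the example is a hypothetical assumption chosen for illustration, not a figure for any real product, and it ignores compression and release samples.

```python
def library_size(notes, velocity_layers, round_robins, articulations,
                 seconds_per_sample, sample_rate=48000, channels=2,
                 bytes_per_sample=3):
    """Estimate the sample count and uncompressed size (in bytes) of a
    sampled instrument. Defaults assume 48 kHz, stereo, 24-bit audio."""
    count = notes * velocity_layers * round_robins * articulations
    size = count * seconds_per_sample * sample_rate * channels * bytes_per_sample
    return count, size

# Hypothetical instrument: 40 notes, 4 layers, 4 round robins, 8 articulations,
# 3 seconds per sample
count, size = library_size(40, 4, 4, 8, 3)
print(count)                 # 5120 samples
print(round(size / 1e9, 2))  # 4.42 (GB, uncompressed)
```

Even these modest assumptions land in the gigabyte range, which explains why large libraries stream from disk or spread their load across networked machines.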

With the power and scope of the virtual instruments emerging today, it’s possible to produce full orchestral scores from your DAW. As nice as these virtual instruments are, they will only get you so far without additional production skills that help you get the most out of your digital compositions.

Given the complexity and diversity of the instruments you’ll attempt to perform on a simple MIDI keyboard, it may take several passes at individual parts and sections to capture a performance you’re happy with. With MIDI, of course, merging performances and editing out mistakes is simple. It doesn’t require dozens of layers of audio files, messy crossfades, or dealing with the other inherent issues of recorded audio. You can do as many takes as necessary and break down the parts in whatever way helps to improve the performance.

If your timing is inconsistent between performances, you can always quantize the notes. Quantization in MIDI refers to moving the performance data to the nearest selected time value, be it a whole note, half note, quarter note, etc. While quantizing can help tighten up your performance, it is also a major contributor to a composition sounding mechanical and unnatural. You don’t want to be off by a whole beat, but tiny imperfections in timing are natural in human performance and can help your music sound more convincing. In addition to the timing unit, some DAW sequencers let you choose a quantization strength, that is, how forcefully the note data is pushed toward the grid. This lets you keep some of the feel of your actual performance.
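Quantization with a strength setting can be expressed as moving each note a fraction of the way toward its nearest grid line. The sketch assumes times measured in beats (quarter notes), so a grid of 0.25 is a sixteenth note in 4/4; `quantize` is an illustrative helper, and actual DAWs may add options such as swing or a capture window.

```python
def quantize(time, grid, strength=1.0):
    """Move a note's start time toward the nearest grid line.
    strength=1.0 snaps fully, 0.5 moves it halfway, 0.0 leaves it alone."""
    target = round(time / grid) * grid       # nearest grid position
    return time + strength * (target - time)

# A note played slightly late, at beat 1.1, on a sixteenth-note grid
full = quantize(1.1, 0.25)          # snaps to 1.0
half = quantize(1.1, 0.25, 0.5)     # about 1.05: tighter, but keeps some feel
```

At partial strength, notes that were far off the grid move a lot, while notes that were nearly in time barely move, so the overall looseness of the performance survives.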

Most sequencers also have a collection of other MIDI processing functions, such as randomization. You can select a group of notes that you’ve quantized and have the sequencer randomize the start time and duration of each note (as well as other parameters such as note velocity) by a small amount, reintroducing some timing imperfections into the performance. Ironically, these processes are sometimes known as humanization functions, but they can nevertheless come in handy when polishing a digital music composition.
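A humanize function of this kind can be sketched as small random offsets applied to each quantized note. The note layout (start and length in beats, MIDI pitch, velocity), the default jitter amounts, and the `humanize` helper are illustrative assumptions; a seeded random generator keeps the result repeatable, which is handy when you want to undo and redo the same variation.

```python
import random

def humanize(notes, time_jitter=0.02, length_jitter=0.02, vel_jitter=6, seed=None):
    """Randomly nudge the start, length, and velocity of each note.

    notes: list of (start_beats, length_beats, pitch, velocity) tuples.
    Jitter amounts are maximum offsets in either direction.
    """
    rng = random.Random(seed)  # seeded generator makes results repeatable
    out = []
    for start, length, pitch, vel in notes:
        start = max(0.0, start + rng.uniform(-time_jitter, time_jitter))
        length = max(0.01, length + rng.uniform(-length_jitter, length_jitter))
        vel = min(127, max(1, vel + rng.randint(-vel_jitter, vel_jitter)))
        out.append((start, length, pitch, vel))
    return out

quantized = [(0.0, 0.5, 60, 90), (0.5, 0.5, 62, 90), (1.0, 0.5, 64, 90)]
played = humanize(quantized, seed=1)
```

Keeping the jitter small (here at most 0.02 beats, about 10 ms at 120 BPM) matters: larger values start to sound sloppy rather than human.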

When actual musicians play their instruments, particularly on longer sustained notes, they typically don’t play at a constant level and timbre. They could be responding to a dynamics marking in the score (such as a crescendo), or they may simply be expressing their own style and interpretation of the music. Differences in the way notes are played also result from simple human factors. One trumpet player pushes the air a little harder than another to get a note started, or to finish a long note when running out of breath. The bowing motion on a stringed instrument varies slightly as the bow moves back and forth, and it differs from one musician to another. Only a machine can produce a note in exactly the same way every time. These nuances aren’t captured in timing or note velocity information, but some samplers can respond to MIDI control data to achieve more dynamic performances. As the name implies, an important one of these is MIDI controller 11, expression. Manipulating the expression value is a great way to add a natural, continuous arc to longer sustained notes and to simulate dynamic swells as well as variations in string bowing. In many cases, controller 11 simply affects the playback volume, although some samplers are programmed to respond to it in a more dynamic way. Many MIDI keyboards have an expression pedal input, allowing you to control expression with a variable foot pedal. If your keyboard has knobs or faders, you can also set these to write expression data, or you can always draw it into your sequencer software by hand with a mouse or trackpad.
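Drawing in an expression swell amounts to writing a series of control change messages whose values rise and fall over the note. The sketch generates CC11 values along half a sine cycle; the curve shape, the 40 to 120 value range, and the `expression_swell` helper are assumptions for illustration. The status byte 0xB0 is a control change on MIDI channel 1, and controller number 11 is expression.

```python
import math

def expression_swell(n_points, low=40, high=120):
    """Generate CC11 (expression) messages that rise from `low` to `high` and
    back, following half a sine cycle, as (status, controller, value) tuples
    on MIDI channel 1."""
    if n_points < 2:
        raise ValueError("need at least two points for a swell")
    msgs = []
    for i in range(n_points):
        shape = math.sin(math.pi * i / (n_points - 1))   # 0 -> 1 -> 0
        value = round(low + (high - low) * shape)
        msgs.append((0xB0, 11, value))                  # control change, CC11
    return msgs

swell = expression_swell(16)  # e.g., spread evenly across a long sustained note
```

In a sequencer, each message would also carry a time position; spacing them evenly across a held note gives the kind of smooth arc a string player produces with the bow.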

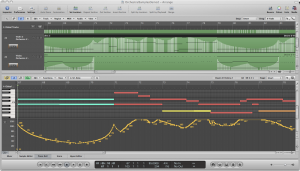

An example of expression data captured into a MIDI sequencer region can be seen in Figure 8.17.

MIDI controller 1, the modulation wheel, is also often linked to a variable element of the sampler instrument. In many cases, it defaults to a vibrato control, either applying a simple LFO to the pitch or dynamically crossfading with a recorded vibrato sample. When long sustained notes are played or sung live, a note may start with a steady pitch, but as the note goes on, an increasing amount of vibrato may be applied, giving it a nice fullness. By taking another pass over your performance with the modulation wheel in hand, you can bring in varying levels of vibrato at appropriate times, enhancing the character and natural feel of your composition.
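The simple LFO approach can be sketched as a sine wave that bends the pitch up and down, with its depth scaled by the mod wheel value (0 to 127). The vibrato rate of 5.5 Hz, the maximum depth of half a semitone, and the `vibrato_freq` helper are assumptions for illustration; samplers choose their own defaults.

```python
import math

def vibrato_freq(base_hz, t, mod_wheel, rate_hz=5.5, max_depth_semitones=0.5):
    """Instantaneous frequency (Hz) at time t (seconds) of a note whose pitch
    is modulated by a sine LFO; the mod wheel (CC1, 0-127) sets the depth."""
    depth = max_depth_semitones * (mod_wheel / 127)       # semitones
    offset = depth * math.sin(2 * math.pi * rate_hz * t)  # current deviation
    return base_hz * 2 ** (offset / 12)

# The mod wheel is raised gradually over a 2-second A4 (440 Hz) note, so the
# note starts steady and the vibrato widens as it sustains.
sr = 100  # control points per second (an arbitrary choice for the sketch)
curve = [vibrato_freq(440.0, n / sr, mod_wheel=round(127 * n / (2 * sr - 1)))
         for n in range(2 * sr)]
```

With the wheel at 0 the pitch stays exactly at 440 Hz; at 127 it swings about half a semitone either side, matching the "start straight, then bloom" shape described above.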

[wpfilebase tag=file id=142 tpl=supplement /]

As you can see, getting a great piece of music out of your digital composition isn’t always as simple as playing a few notes on your MIDI keyboard. With all of the nuanced control available in today’s sampler systems, crafting even a single instrument part can require careful attention to detail and is often an exercise in patience. Perhaps hiring a live musician is sounding much better at the moment? Certainly, when you have access to the real deal, it is often a simpler and more effective way to create a piece of music. Yet if you enjoy having total control over your production and are deeply familiar with your digital music production toolset, virtual instruments can be a fast and powerful means of bringing your music to life.