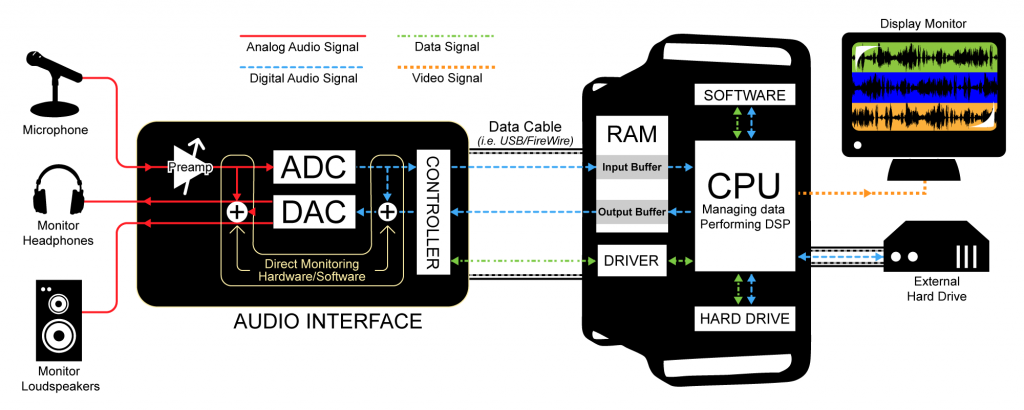

In Section 5.1.4, we looked at the digital audio signal path during recording. A close look at this signal path shows how delays can occur between the input and output of the audio signal, and how such delays can be minimized.

Latency is the period of time between when an audio signal enters a system and when the sound is output and can be heard. Digital audio systems introduce latency problems not present in analog systems. It takes time for a piece of digital equipment to process audio data, time that isn’t required in fully analog systems where sound travels along wires as electric current at nearly the speed of light. An immediate source of latency in a digital audio system arises from analog-to-digital and digital-to-analog conversions. Each conversion adds latency on the order of milliseconds to your system. Another factor influencing latency is buffer size. The input buffer must fill up before the digitized audio data is sent along the audio stream to output. Buffer sizes vary by driver and system, but a size of 1024 samples would not be unusual, so let’s use that as an estimate. At a sampling rate of 44.1 kHz, it would take about 23 ms to fill a buffer with 1024 samples, as shown below.

$$!\frac{1\, sec}{44,100\, samples}\ast 1024\, samples\approx 23\: ms$$
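The same arithmetic can be sketched in a few lines of Python. The function name `buffer_fill_ms` is hypothetical and chosen only for illustration; it simply divides the buffer size by the sampling rate and converts seconds to milliseconds.

```python
def buffer_fill_ms(buffer_samples: int, sample_rate_hz: float) -> float:
    """Return the time, in milliseconds, needed to fill a buffer.

    buffer_samples: number of samples the buffer holds
    sample_rate_hz: samples captured per second
    """
    return buffer_samples / sample_rate_hz * 1000.0


# The estimate used in the text: 1024 samples at 44.1 kHz.
print(f"{buffer_fill_ms(1024, 44_100):.1f} ms")
```

Note that this covers only the buffer-filling time; the conversion and processing delays described above come on top of it.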

Thus, total latency including the time for ADC, DAC, and buffer-filling is on the order of milliseconds. A few milliseconds of delay may not seem very much, but when multiple sounds are expected to be synchronized when they arrive at the listener, this amount of latency can be a problem, resulting in phase offsets and echoes.

Let’s consider a couple of scenarios in which latency can be a problem, and then look at how the problem can be dealt with. Imagine a situation where a singer is singing live on stage. Her voice is taken in by the microphone and undergoes digital processing before it comes out the loudspeakers. In this case, the sound is not being recorded, but there’s latency nonetheless. Any ADC/DAC conversions and audio processing along the signal path can result in an audible delay between when a singer sings into a microphone and when the sound from the microphone radiates out of a loudspeaker. In this situation, the processed sound arrives at the audience’s ears after the live sound of the singer’s voice, resulting in an audible echo. The simplest way to reduce the latency here is to avoid analog-to-digital and digital-to-analog conversions whenever possible. If you can connect two pieces of digital sound equipment using a digital signal transmission instead of an analog transmission, you can cut your latency down by at least two milliseconds because you’ll have eliminated two signal conversions.

Buffer size contributes to latency as well. Consider a scenario in which a singer’s voice is being digitally recorded (Figure 5.20). When an audio stream is captured in a digital device like a computer, it passes through an input buffer. This input buffer must be large enough to hold the audio samples that are coming in while the CPU is off somewhere else doing other work. When a singer is singing into a microphone, audio samples are being collected at a fixed rate – say 44,100 samples per second. The singer isn’t going to pause her singing and the sound card isn’t going to slow down the number of samples it takes per second just because the CPU is busy. If the input buffer is too small, samples have to be dropped or overwritten because the CPU isn’t there in time to process them. If the input buffer is sufficiently large, it can hold the samples that accumulate while the CPU is busy, but the amount of time it takes to fill up the buffer is added to the latency.
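The trade-off can be illustrated with a deliberately simplified model. It assumes that the CPU empties the buffer completely each time it returns, and that any samples arriving after the buffer is full are lost. The function name and the list of busy periods are made up for illustration and are not measurements from a real system.

```python
def count_dropped(capacity: int, busy_periods: list[int]) -> int:
    """Count samples lost while the CPU is away.

    capacity: number of samples the input buffer can hold
    busy_periods: for each stretch in which the CPU is busy, the number
        of samples that arrive before it returns (hypothetical values)
    """
    dropped = 0
    for arriving in busy_periods:
        # Samples beyond the buffer's capacity have nowhere to go.
        dropped += max(0, arriving - capacity)
    return dropped


SAMPLE_RATE_HZ = 44_100
busy = [300, 900, 512, 1200]  # assumed workload, in samples per busy stretch

for capacity in (256, 1024, 2048):
    fill_ms = capacity / SAMPLE_RATE_HZ * 1000.0
    lost = count_dropped(capacity, busy)
    print(f"buffer {capacity:5d}: fill time {fill_ms:5.1f} ms, dropped {lost}")
```

In this toy model the smallest buffer loses samples in every busy stretch, while the largest loses none but takes the longest to fill, which is the latency cost described above.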

The singer herself will be affected by this latency if she’s listening to her voice through headphones as her voice is being digitally recorded (called live sound monitoring). If the system is set up to use software monitoring, the sound of the singer’s voice enters the microphone, undergoes ADC and then some processing, is converted back to analog, and reaches the singer’s ears through the headphones. Software monitoring requires one analog-to-digital and one digital-to-analog conversion. Depending on the buffer size and amount of processing done, the singer may not hear herself back in the headphones until 50 to 100 milliseconds after she sings. Even an untrained ear will perceive this latency as an audible echo, making it extremely difficult to sing on beat. If the singer is also listening to a backing track played directly from the computer, the computer will deliver that backing track to the headphones sooner than it can deliver the audio coming in live to the computer. (A backing track is a track that has already been recorded and is being played while the singer sings.)
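A rough latency budget for software monitoring can be sketched as follows. Every number here is an assumption chosen for illustration: 1 ms per conversion, 5 ms of processing, and an output buffer the same size as the input buffer (the text above discusses only the input buffer). Actual values depend on the interface, driver, and software in use.

```python
from dataclasses import dataclass


@dataclass
class MonitoringPath:
    """Hypothetical model of a software-monitoring signal path."""
    sample_rate_hz: float
    input_buffer_samples: int
    output_buffer_samples: int   # assumption: the output side is buffered too
    adc_ms: float = 1.0          # assumed analog-to-digital conversion time
    dac_ms: float = 1.0          # assumed digital-to-analog conversion time
    processing_ms: float = 5.0   # assumed time spent on effects processing

    def total_ms(self) -> float:
        """Sum the conversion, buffering, and processing delays."""
        buffered = self.input_buffer_samples + self.output_buffer_samples
        buffer_ms = buffered / self.sample_rate_hz * 1000.0
        return self.adc_ms + buffer_ms + self.processing_ms + self.dac_ms


path = MonitoringPath(sample_rate_hz=44_100,
                      input_buffer_samples=1024,
                      output_buffer_samples=1024)
print(f"round trip: about {path.total_ms():.0f} ms")
```

Under these assumptions the round trip lands at the low end of the 50 to 100 millisecond range mentioned above, with the two buffers accounting for most of the delay.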

Latency in live sound monitoring can be avoided by hardware monitoring (also called direct monitoring). Hardware monitoring splits the newly digitized signal before sending it into the computer, mixing it directly into the output and eliminating the longer latencies caused by analog-to-digital conversion and input buffers (Figure 5.25). The disadvantage of hardware monitoring is that the singer cannot hear her voice with processing such as reverb applied. Audio interfaces that offer direct hardware monitoring with zero latency generally let you control the mix of what’s coming directly from the microphone and what’s coming from the computer. That’s the purpose of the monitor mix knob, circled in red in Figure 5.24. When the mix knob is turned fully counterclockwise, only the direct input signals (e.g., from the microphone) are heard. When the mix knob is turned fully clockwise, only the signal from the DAW software is heard.

In general, the way to reduce latency caused by buffer size is to use the most efficient driver available for your system. In Windows systems, ASIO drivers are a good choice. ASIO drivers cut down on latency by allowing your audio application program to speak directly to the sound card, without having to go through the operating system. Once you have the best driver in place, you can check the interface to see if the driver gives you any control over the buffer size. If you’re allowed to adjust the size, you can find the optimum size mostly by trial and error. If the buffer is too large, the latency will be bothersome. If it’s too small, you’ll hear breaks in the audio because the CPU may not be able to return quickly enough to empty the buffer, and thus audio samples are dropped out.

With dedicated hardware systems (digital audio equipment as opposed to a DAW based on your desktop or laptop computer) you don’t usually have the ability to change the buffer size because those buffers have been fixed at the factory to match perfectly the performance of the specific components inside that device. In this situation, you can reduce the latency of the hardware by increasing your internal sampling rate. This may seem counterintuitive at first because a higher sampling rate means that you’re processing more data per second. This is true, but remember that the buffer sizes have been specifically set to match the performance capabilities of that hardware, so if the hardware gives you an option to run at a higher sampling rate, you can be confident that the system is capable of handling that speed without errors or dropouts. For a buffer of 1024 samples, a sampling rate of 192 kHz has a latency of about 5.3 ms, as shown below.

$$!1024\, samples\ast \frac{1\, sec}{192,000\, samples}\approx 5.3\: ms$$

If you can increase your sampling rate, you won’t necessarily get a better sound from your system, but the sound is delivered with less latency.
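To see how the fill time of a fixed-size buffer shrinks as the sampling rate rises, the calculation can be repeated for several rates. This is a minimal sketch; the helper name `latency_by_rate` and the list of rates are illustrative choices, and a given device may not support all of them.

```python
def latency_by_rate(buffer_samples: int, rates_hz: list[int]) -> dict[int, float]:
    """Map each sampling rate to the buffer fill time in milliseconds."""
    return {rate: buffer_samples / rate * 1000.0 for rate in rates_hz}


# A fixed 1024-sample buffer at some commonly used sampling rates.
for rate, ms in latency_by_rate(1024, [44_100, 48_000, 96_000, 192_000]).items():
    print(f"{rate / 1000:6.1f} kHz: {ms:5.1f} ms")
```

Because the buffer size stays constant, doubling the sampling rate halves the time needed to fill it.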
